
GRAY8 vs RTP

problem:

single-plane raw formats such as GRAY8 are not part of RFC 4175, and as such they are second-class citizens in GStreamer, RTSP and everywhere else.

solution:

a missing channel in any image format is just a convention; we can package any data stream as long as we mind our strides.

view the image format as just a stream of octets. here is our stream in Y8 format:

```
+--+--+--+--+ +--+--+--+--+
|Y0|xx|xx|xx| |Y1|xx|xx|xx| ...
+--+--+--+--+ +--+--+--+--+
```

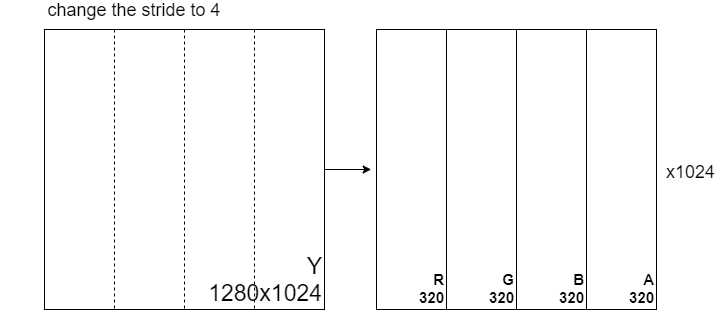

and again, the same data quartered: four octets per pixel at 8-bit depth, i.e. a pixel stride of 4

```
+--+--+--+--+ +--+--+--+--+
|R0|G0|B0|A0| |R1|G1|B1|A1| ...
+--+--+--+--+ +--+--+--+--+
```

map the GRAY8 one-channel source into a quarter-width, four-channel RGBA output image

we will then remap it to Y8 on the receiving side.

the trick is to stick to the formats described in GStreamer's built-in format design document and let GStreamer handle the format conventions.
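The remapping is plain arithmetic: a GRAY8 frame of W x H octets holds exactly as many bytes as an RGBA frame of W/4 x H. A minimal sketch of that check follows. `fake_rgba_width` is a hypothetical helper name, and the requirement that the GRAY8 width be a multiple of 4 is an assumption that follows from the 4-octet pixel.

```shell
#!/bin/sh
# Hypothetical helper: print the RGBA width that carries one GRAY8 row.
# Assumption: the GRAY8 width must be a multiple of 4, otherwise octets
# would be left over at the end of each row.
fake_rgba_width() {
    gray_width=$1
    if [ $((gray_width % 4)) -ne 0 ]; then
        echo "width $gray_width is not a multiple of 4" >&2
        return 1
    fi
    echo $((gray_width / 4))
}

# The 4x4 GRAY8 frame used on this page maps to a 1x4 RGBA frame.
gray_w=4
gray_h=4
rgba_w=$(fake_rgba_width "$gray_w")
echo "GRAY8 ${gray_w}x${gray_h} = $((gray_w * gray_h)) octets"
echo "RGBA  ${rgba_w}x${gray_h} = $((rgba_w * 4 * gray_h)) octets"
```

Both lines report the same octet count, which is why the receiver can reinterpret the buffer without copying or converting anything.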

the hammer

as easy as

```
gst-launch-1.0 -q \
  videotestsrc pattern=white num-buffers=1 \
  ! video/x-raw, format=GRAY8, width=4, height=4 \
  ! identity dump=1 \
  ! rawvideoparse format=rgba width=1 height=4 \
  ! identity dump=1 \
  ! fakesink

00000000 (0x7f369c00a8c0): eb eb eb eb eb eb eb eb eb eb eb eb eb eb eb eb  ................
00000000 (0x556228700560): eb eb eb eb eb eb eb eb eb eb eb eb eb eb eb eb  ................
```

note: why is a white pixel not FF (255)?

gstreamer uses YUV colorimetry when generating a GRAY8 test image, and YUV video range does not use the entire 0-255 space: luma is limited to 16-235, so white comes out as eb (235).
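The value in the dump can be checked with shell arithmetic. The sketch below also expands a limited-range luma value to full range with the common (Y - 16) * 255 / 219 scaling. `luma_to_full` is a hypothetical helper name, and the clamping and integer rounding are assumptions of this sketch, not a description of what GStreamer does internally.

```shell
#!/bin/sh
# Hypothetical helper: expand limited-range luma (16-235) to full range (0-255).
luma_to_full() {
    y=$1
    # Clamp values outside the nominal limited range.
    if [ "$y" -lt 16 ]; then y=16; fi
    if [ "$y" -gt 235 ]; then y=235; fi
    # Adding 109 (about half of 219) rounds to the nearest integer.
    echo $(( ((y - 16) * 255 + 109) / 219 ))
}

echo "0xeb in decimal: $((0xeb))"
echo "limited-range white 235 -> full range $(luma_to_full 235)"
echo "limited-range black 16  -> full range $(luma_to_full 16)"
```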

FakeRawPay

gray8 raw video transported as RTP payload via a “fake” rgba parsing step

conforming to RFC 4175

pay

```
gst-launch-1.0 -v \
  videotestsrc pattern=white is-live=1 \
  ! video/x-raw, format=GRAY8, width=4, height=4, framerate=30/1 \
  ! rawvideoparse width=1 height=4 format=rgba \
  ! rtpvrawpay \
  ! queue \
  ! udpsink host=localhost port=5000
```

depay and back to gray8

```
gst-launch-1.0 -v \
  udpsrc port=5000 \
  ! "application/x-rtp, media=(string)video, clock-rate=(int)90000, encoding-name=(string)RAW, sampling=(string)RGBA, depth=(string)8, width=(string)1, height=(string)4, colorimetry=(string)SMPTE240M, payload=(int)96" \
  ! rtpvrawdepay \
  ! queue \
  ! rawvideoparse width=4 height=4 format=gray8 \
  ! videoconvert \
  ! fpsdisplaysink
```
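For other frame sizes only the width and height fields of the receiver caps change. A small sketch that prints the caps string for a given GRAY8 size, with every other field copied from the receiver pipeline above. `fake_rgba_rtp_caps` is a hypothetical helper name, and it assumes the GRAY8 width is a multiple of 4.

```shell
#!/bin/sh
# Hypothetical helper: print the RTP caps for a GRAY8 frame carried as RGBA.
# Arguments: GRAY8 width, GRAY8 height. Assumes the width is a multiple of 4.
fake_rgba_rtp_caps() {
    gray_width=$1
    height=$2
    if [ $((gray_width % 4)) -ne 0 ]; then
        echo "width $gray_width is not a multiple of 4" >&2
        return 1
    fi
    rgba_width=$((gray_width / 4))
    printf 'application/x-rtp, media=(string)video, clock-rate=(int)90000, encoding-name=(string)RAW, sampling=(string)RGBA, depth=(string)8, width=(string)%s, height=(string)%s, colorimetry=(string)SMPTE240M, payload=(int)96\n' \
        "$rgba_width" "$height"
}

# The 4x4 GRAY8 frame used on this page.
fake_rgba_rtp_caps 4 4
```

The receiving `rawvideoparse` still has to be given the real GRAY8 width and height, since the RTP caps only describe the disguised RGBA frame.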

strides and offsets

we don't need to dig into the following, as we use the capabilities as listed in the design document.

but hopefully this section will advance your understanding.

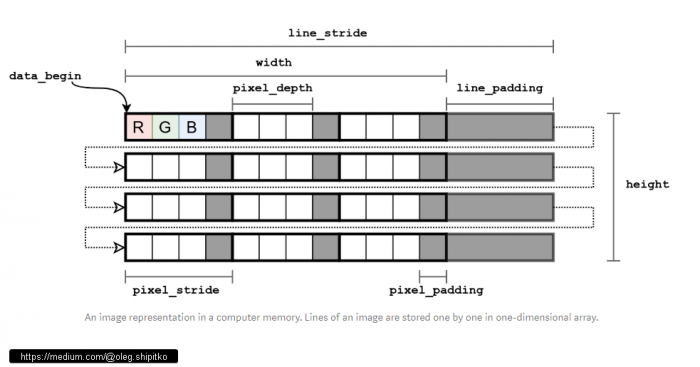

pstride only exists for simple pixel formats. It represents the distance in bytes between two pixels. This value, when it exists, is constant for a specific format.

rstride (row stride) is the size, in bytes, of one image line. In general, it must be larger than or equal to pstride * width, when pstride exists for your format. The design document outlines the calculation of the default strides and offsets (of channels/planes) within the libgstvideo library. Notice the use of RU4 or RU2, which round up to the next multiple of 4 or 2; they ensure that each row starts on an aligned pointer. This simplifies memory access in software processing.
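The rounding itself is simple integer arithmetic. A minimal sketch, where `ru4`, `ru2` and `aligned_rstride` are hypothetical helper names; which rounding a given format actually uses is listed per format in the design document, so applying RU4 to every row here is only an illustration.

```shell
#!/bin/sh
# Round n up to the next multiple of 4 (RU4) or of 2 (RU2).
ru4() { echo $(( ($1 + 3) / 4 * 4 )); }
ru2() { echo $(( ($1 + 1) / 2 * 2 )); }

# Illustrative row stride: the minimum pstride * width, aligned with RU4.
# Arguments: pstride, width.
aligned_rstride() {
    pstride=$1
    width=$2
    ru4 $((pstride * width))
}

echo "1 octet per pixel, width 5:  rstride $(aligned_rstride 1 5)"
echo "4 octets per pixel, width 5: rstride $(aligned_rstride 4 5)"
```

With 4 octets per pixel the minimum stride is already a multiple of 4, so the rounding only adds padding for the narrower pixel.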

When you use VAAPI, you are using hardware accelerators. These accelerators have wider memory alignment requirements, so strides will be larger, and sometimes there will also be a couple of padding rows.

GRAY8

8-bit grayscale “Y800” same as “GRAY8”

| Component | color | depth | pstride | offset | rstride | size |
|---|---|---|---|---|---|---|
| 0 | Y | 8 | 1 | 0 | width | rstride * height |

```
+--+--+--+--+ +--+--+--+--+
|Y0|x |x |x | |Y1|x |x |x | ... (two-pixel representation, in octets)
+--+--+--+--+ +--+--+--+--+
```

RGBA

rgb with alpha channel last (big-endian)

| Component | color | depth | pstride | offset | rstride | size |
|---|---|---|---|---|---|---|
| 0 | R | 8 | 4 | 0 | width * 4 | rstride * height |
| 1 | G | 8 | 4 | 1 | | |
| 2 | B | 8 | 4 | 2 | | |
| 3 | A | 8 | 4 | 3 | | |

```
+--+--+--+--+ +--+--+--+--+
|R0|G0|B0|A0| |R1|G1|B1|A1| ... (two-pixel representation, in octets)
+--+--+--+--+ +--+--+--+--+
```
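Taken together, the table columns give the byte position of any component inside a packed buffer: y * rstride + x * pstride + offset. A minimal sketch of that formula, where `component_offset` is a hypothetical helper name and a single packed plane is assumed.

```shell
#!/bin/sh
# Hypothetical helper: byte position of one component in a packed buffer.
# Arguments: x, y, pstride, component offset, rstride.
component_offset() {
    x=$1
    y=$2
    pstride=$3
    comp_offset=$4
    rstride=$5
    echo $((y * rstride + x * pstride + comp_offset))
}

# RGBA, width 2 (rstride 8): the G component of pixel (1, 0).
echo "RGBA G at (1,0):  byte $(component_offset 1 0 4 1 8)"
# GRAY8, width 4 (rstride 4): the Y component of pixel (2, 1).
echo "GRAY8 Y at (2,1): byte $(component_offset 2 1 1 0 4)"
```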

fourcc on linux