
live

SRT Hub Cloud Based Media Routing | Haivision: https://www.haivision.com/products/srthub/

HLS

gstreamer/video.js

The easiest stream I got working was with hlssink:

gst-launch-1.0 videotestsrc is-live=true ! x264enc ! mpegtsmux \
  ! hlssink playlist-root=https://your-site.org \
  location=/srv/hls/hlssink.%05d.ts \
  playlist-location=/srv/hls/playlist.m3u8
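For reference, hlssink keeps rewriting a live HLS media playlist that lists a sliding window of the most recent segments. The Python sketch below renders that kind of playlist for segments named like hlssink.%05d.ts. Tag names follow RFC 8216. It only illustrates the format, and the exact tags and version hlssink itself writes may differ. The function name live_playlist and the 4-second durations are hypothetical.

```python
import math


def live_playlist(segments, first_seq, root=""):
    """Render a minimal live HLS media playlist.

    segments:  non-empty list of (filename, duration_in_seconds) currently in the window
    first_seq: media sequence number of the first segment in the window
    root:      optional base URL prepended to each filename (like hlssink's playlist-root)
    """
    if not segments:
        raise ValueError("a playlist needs at least one segment")
    # The target duration must be >= every segment duration rounded to the
    # nearest integer; rounding up is a conservative way to satisfy that.
    target = max(math.ceil(duration) for _, duration in segments)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows decimal #EXTINF durations
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_seq}",
    ]
    for name, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(f"{root.rstrip('/')}/{name}" if root else name)
    # No #EXT-X-ENDLIST tag: its absence tells the player the stream is live
    # and that it should keep reloading the playlist.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    window = [(f"hlssink.{i:05d}.ts", 4.0) for i in range(12, 15)]
    print(live_playlist(window, first_seq=12, root="https://your-site.org"), end="")
```

Each reload of the playlist shifts the window forward: the media sequence number increases and the oldest segment drops off the top.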

And video.js on the other side (the player page):

<video-js id=vid1 width=600 height=300 class="vjs-default-skin" controls>
  <source src="https://example.com/index.m3u8" type="application/x-mpegURL">
</video-js>
<script src="video.js"></script>
<script src="videojs-http-streaming.min.js"></script>
<script>
  var player = videojs('vid1');
  player.play();
</script>
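If the video.js page is served from a different origin than the HLS files, the browser will only load the playlist and segments when the file server sends CORS headers. Below is a minimal sketch using Python's standard http.server. It assumes the segments are written to /srv/hls, as in the hlssink command. The class name HLSHandler, the port 8080, and the no-cache choice for playlists are assumptions. A real deployment would probably put a proper web server in front instead.

```python
import functools
import http.server


class HLSHandler(http.server.SimpleHTTPRequestHandler):
    """Static file handler that adds what an HLS player on another origin needs."""

    # Serve HLS files with the MIME types registered for them.
    extensions_map = {
        **http.server.SimpleHTTPRequestHandler.extensions_map,
        ".m3u8": "application/vnd.apple.mpegurl",
        ".ts": "video/mp2t",
    }

    def end_headers(self):
        # Let a page on any origin fetch playlists and segments (CORS).
        self.send_header("Access-Control-Allow-Origin", "*")
        # A live playlist is rewritten every few seconds, so discourage caching it.
        if self.path.split("?", 1)[0].endswith(".m3u8"):
            self.send_header("Cache-Control", "no-cache")
        super().end_headers()


def make_server(directory, host="", port=8080):
    """Build (but do not start) a threaded server rooted at `directory`."""
    handler = functools.partial(HLSHandler, directory=directory)
    return http.server.ThreadingHTTPServer((host, port), handler)


if __name__ == "__main__":
    server = make_server("/srv/hls")
    print("serving /srv/hls on port", server.server_address[1])
    server.serve_forever()
```

With this running on the machine that holds /srv/hls, the source tag's src would point at that host's port 8080 and playlist.m3u8.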

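Pipe video from an RTSP uEye camera, decode it and show the measured frame rate (fakesink has no source pad, so no element can be linked after it):

gst-launch-1.0.exe rtspsrc location=rtsp://169.254.37.87:8554/mystream ! decodebin ! videoconvert ! fpsdisplaysink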

Javelin

Install Rust on the server:

curl https://sh.rustup.rs -sSf | sh
source $HOME/.cargo/env

You'll probably need a few dependencies, such as:

sudo apt install libssl-dev

cargo install javelin
# add a user with an associated stream key to the database
javelin --permit-stream-key="idiotstream:cc144117a8fe59af0f7f34ce9eca8f05" --rtmp-bind 46.101.213.56 --http-bind 46.101.213.56
# start the server if not running
javelin run
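The stream key above is a user name and a 32-character hex secret joined by a colon. Below is a small Python sketch for minting a fresh pair in the same shape. It assumes that user:key format is what the --permit-stream-key flag expects, as in the command above. The helper names new_stream_key and permit_command are hypothetical, and the command is only printed, not run.

```python
import secrets
import shlex


def new_stream_key(user, nbytes=16):
    """Return "user:key" with a random hex key (16 bytes -> 32 hex characters)."""
    if not user or ":" in user:
        raise ValueError("user must be non-empty and must not contain ':'")
    # secrets (not random) gives a cryptographically strong key.
    return f"{user}:{secrets.token_hex(nbytes)}"


def permit_command(user, bind_ip):
    """Build the javelin invocation from the notes with a freshly generated key."""
    return [
        "javelin",
        f"--permit-stream-key={new_stream_key(user)}",
        "--rtmp-bind", bind_ip,
        "--http-bind", bind_ip,
    ]


if __name__ == "__main__":
    # shlex.join quotes arguments so the printed line is safe to paste into a shell.
    print(shlex.join(permit_command("idiotstream", "46.101.213.56")))
```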

Couldn't get the server/client to reach port 8080 to see the stream. Trying the docker-compose way instead.

streamline

opencv>ffmpeg>hls
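One common way to wire that chain is to let OpenCV produce frames and pipe them raw into an ffmpeg process, which encodes H.264 and writes HLS segments. Below is a Python sketch under these assumptions:

- Frames are BGR uint8 NumPy arrays of a fixed size, which is what cv2.VideoCapture.read() returns.
- ffmpeg with libx264 is on the PATH.
- The 2-second segments and 5-entry playlist are arbitrary choices.

ffmpeg_hls_cmd only builds the argument list; stream_frames actually launches ffmpeg. Both names are hypothetical.

```python
import subprocess


def ffmpeg_hls_cmd(width, height, fps, playlist, segment_seconds=2):
    """ffmpeg arguments: raw BGR frames on stdin -> H.264 -> live HLS playlist."""
    return [
        "ffmpeg",
        "-f", "rawvideo",            # stdin carries headerless frames...
        "-pix_fmt", "bgr24",         # ...in OpenCV's default channel order
        "-s", f"{width}x{height}",
        "-r", str(fps),
        "-i", "-",                   # "-" = read input from stdin
        "-c:v", "libx264",
        "-preset", "veryfast",
        "-tune", "zerolatency",
        "-pix_fmt", "yuv420p",       # output pixel format most players can decode
        "-g", str(fps * segment_seconds),  # one keyframe per segment so cuts are clean
        "-f", "hls",
        "-hls_time", str(segment_seconds),
        "-hls_list_size", "5",       # keep a sliding window of 5 segments
        "-hls_flags", "delete_segments",  # remove segments that left the window
        playlist,
    ]


def stream_frames(frames, width, height, fps, playlist):
    """Feed an iterable of frames (e.g. from cv2.VideoCapture) into ffmpeg."""
    frame_bytes = width * height * 3  # bgr24 = 3 bytes per pixel
    proc = subprocess.Popen(ffmpeg_hls_cmd(width, height, fps, playlist),
                            stdin=subprocess.PIPE)
    try:
        for frame in frames:
            data = frame.tobytes()
            if len(data) != frame_bytes:
                raise ValueError("frame size does not match width x height x 3")
            proc.stdin.write(data)
    finally:
        proc.stdin.close()  # EOF lets ffmpeg finish the last segment
        proc.wait()
```

Setting -g to fps times the segment length is the design choice that matters here. The HLS muxer can only cut at keyframes, so this keeps segment lengths close to hls_time.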

optimizing

Shaka

https://github.com/google/shaka-streamer

https://github.com/google/shaka-packager

https://google.github.io/shaka-packager/html/index.html

jsmpeg

JsSIP

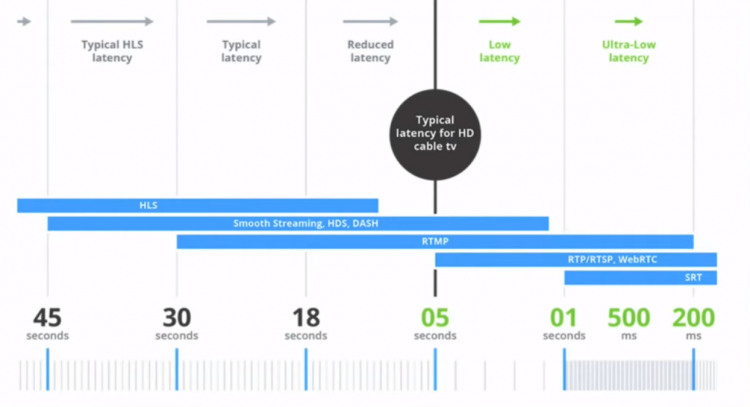

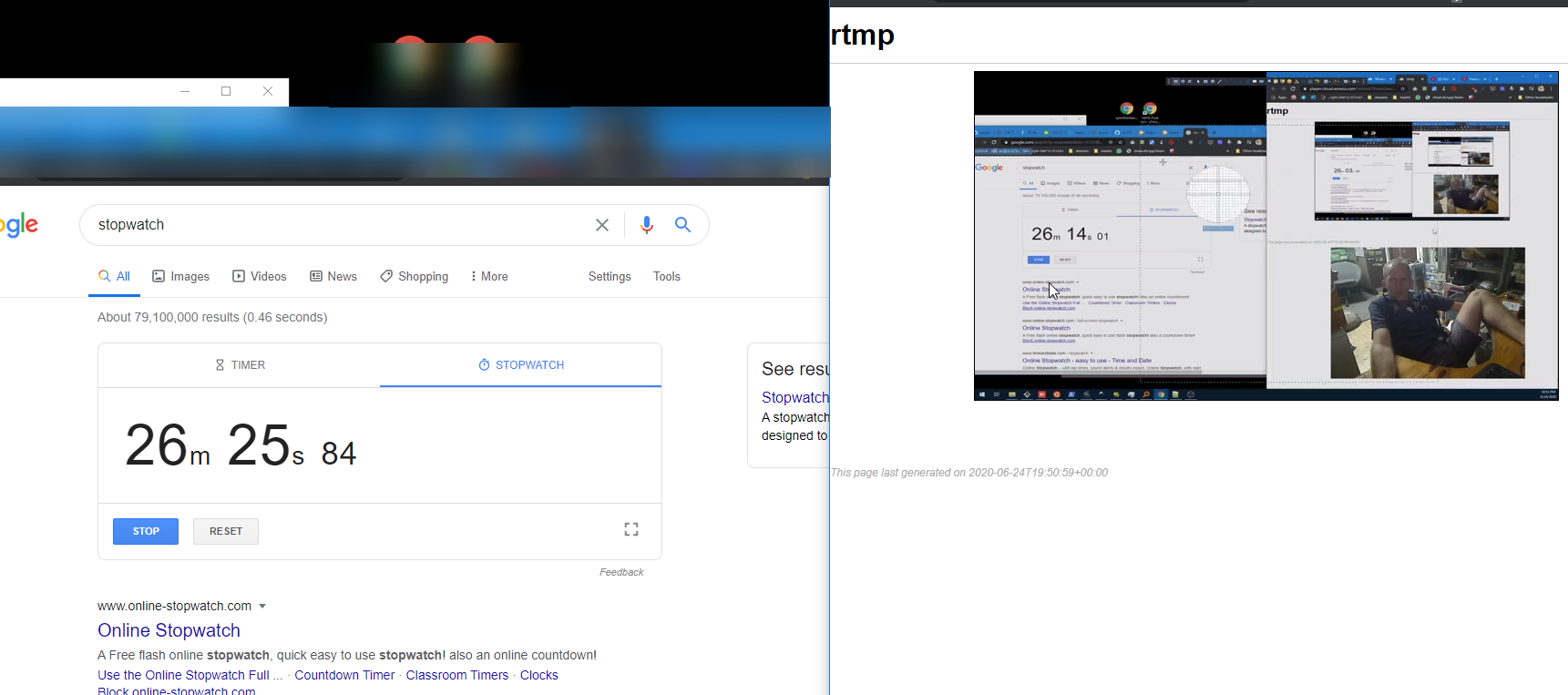

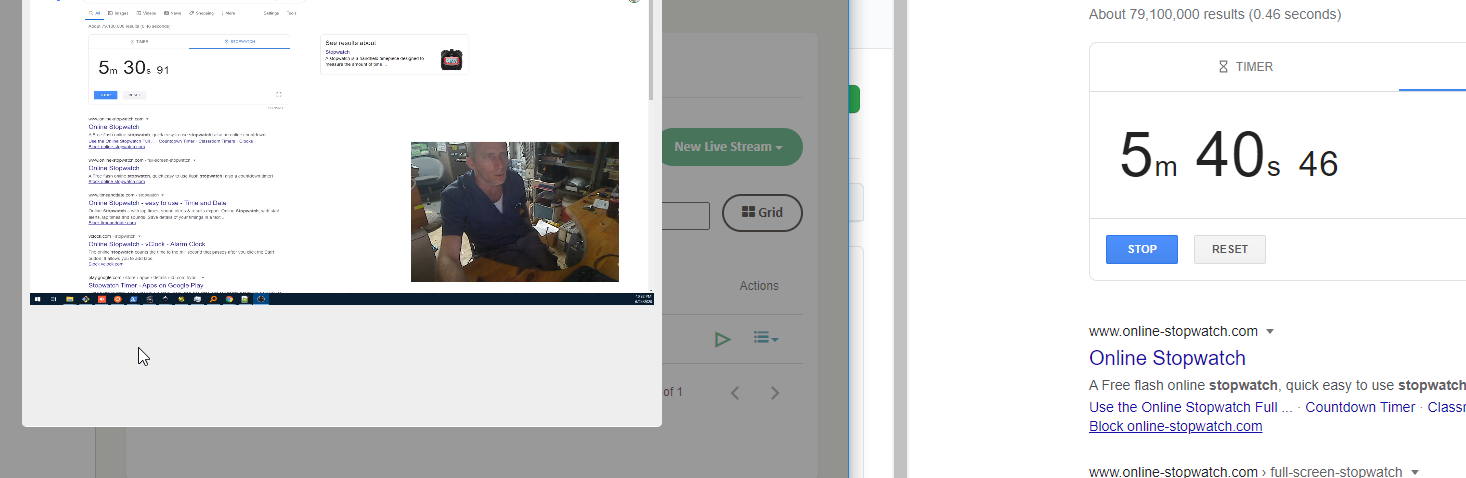

SRT

https://www.haivision.com/blog/all/rtmp-vs-srt/ -> haivision_white_paper_rtmp_vs_srt_comparing_latency_and_maximum_bandwidth.pdf

https://github.com/Haivision/srt/blob/master/docs/why-srt-was-created.md

UDP-based transport. Not meant for broadcasting to many viewers; it provides low latency and packet recovery between two endpoints.
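The packet recovery part works roughly like this:

1. Every packet carries a sequence number.
2. The receiver spots holes and asks the sender to resend them with a NAK.
3. Anything not recovered within the configured latency window is given up on.

The Python toy model below shows only the hole-detection step. It is not SRT's implementation: it ignores sequence-number wraparound and all timing. The class name GapTracker is hypothetical.

```python
class GapTracker:
    """Toy receiver-side loss detection for NAK-based retransmission."""

    def __init__(self):
        self.next_expected = None  # next sequence number we expect to see
        self.missing = set()       # sequence numbers reported lost, not yet recovered

    def on_packet(self, seq):
        """Record an arriving packet; return the sequence numbers to NAK (possibly empty)."""
        if self.next_expected is None:
            self.next_expected = seq + 1
            return []
        if seq >= self.next_expected:
            # Everything between the expected number and this one was skipped.
            lost = list(range(self.next_expected, seq))
            self.missing.update(lost)
            self.next_expected = seq + 1
            return lost
        # Older number: a retransmission (or duplicate) that may fill a hole.
        self.missing.discard(seq)
        return []
```

SRT itself goes further. It periodically repeats NAKs for holes that are still open, and with too-late packet drop enabled it discards packets that can no longer be delivered within the latency budget.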

Build SRT and start the server:

git clone https://github.com/Haivision/srt
cd srt && ./configure && make
./srt-live-transmit udp://:1234 srt://:4201 -v

Start a producer using ffmpeg's lavfi test source (testsrc):

ffmpeg -f lavfi -re -i testsrc=duration=300:size=1280x720:rate=30 \
  -pix_fmt yuv420p -c:v libx264 -b:v 1000k -g 30 -keyint_min 120 -profile:v baseline -preset veryfast \
  -f mpegts "udp://127.0.0.1:1234?pkt_size=1316"

Watch in VLC:

srt://127.0.0.1:4201
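Why pkt_size=1316: an MPEG-TS packet is always 188 bytes, so 1316 bytes is exactly seven TS packets per UDP datagram. That also matches SRT's default live-mode payload size, and it stays under a typical 1500-byte Ethernet MTU once headers are added. Below is a quick Python check of that arithmetic, plus a rough datagram rate for the -b:v 1000k test stream. The rate counts video only and ignores TS/PES overhead, so the real rate is somewhat higher.

```python
TS_PACKET_BYTES = 188      # fixed MPEG-TS packet size
SRT_LIVE_PAYLOAD = 1316    # SRT's default payload size in live mode


def ts_packets_per_datagram(payload=SRT_LIVE_PAYLOAD):
    """How many whole TS packets fit in one datagram payload."""
    return payload // TS_PACKET_BYTES


def datagrams_per_second(bitrate_bps, payload=SRT_LIVE_PAYLOAD):
    """Rough datagram rate for a given bitrate (ignores container overhead)."""
    return bitrate_bps / 8 / payload


if __name__ == "__main__":
    print(ts_packets_per_datagram())               # 7
    print(round(datagrams_per_second(1_000_000)))  # 95
```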

http://en.kiloview.com/product/E2-H.264-HDMI-to-IP-Wired-Video-Encoder-59.html

Server providers

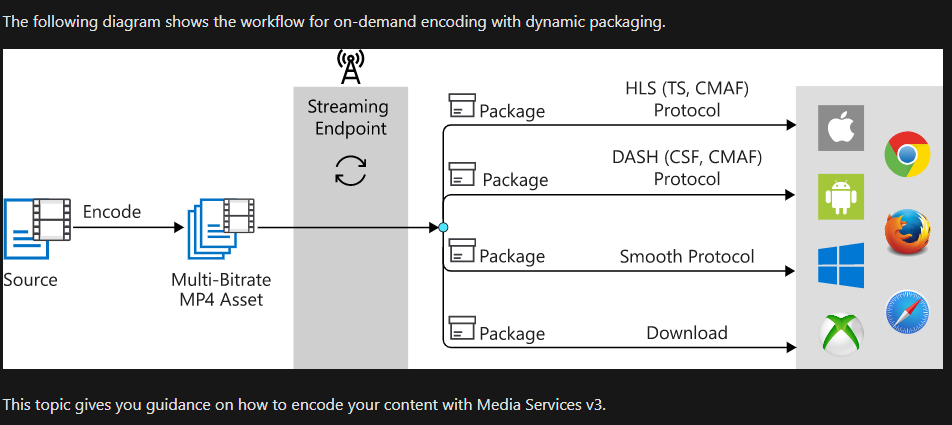

AZURE

Does support SRT, unlike AWS, with their RTMP end of life coming in 2021.

https://azure.microsoft.com/en-us/blog/what-s-new-in-azure-media-services-video-processing/

AWS

Kinesis

Q: What’s the difference between Kinesis Video Streams and AWS Elemental MediaLive?

AWS Elemental MediaLive is a broadcast-grade live video encoding service. It lets you create high-quality video streams for delivery to broadcast televisions and internet-connected multiscreen devices, like connected TVs, tablets, smart phones, and set-top boxes. The service functions independently or as part of AWS Media Services.

Amazon Kinesis Video Streams makes it easy to securely stream video from connected devices to AWS for real-time and batch-driven machine learning (ML), video playback, analytics, and other processing. It enables customers to build machine-vision based applications that power smart homes, smart cities, industrial automation, security monitoring, and more.

elemental

medialive

https://docs.aws.amazon.com/medialive/latest/apireference

Pricing is based on input/output encoding settings: https://aws.amazon.com/medialive/pricing/

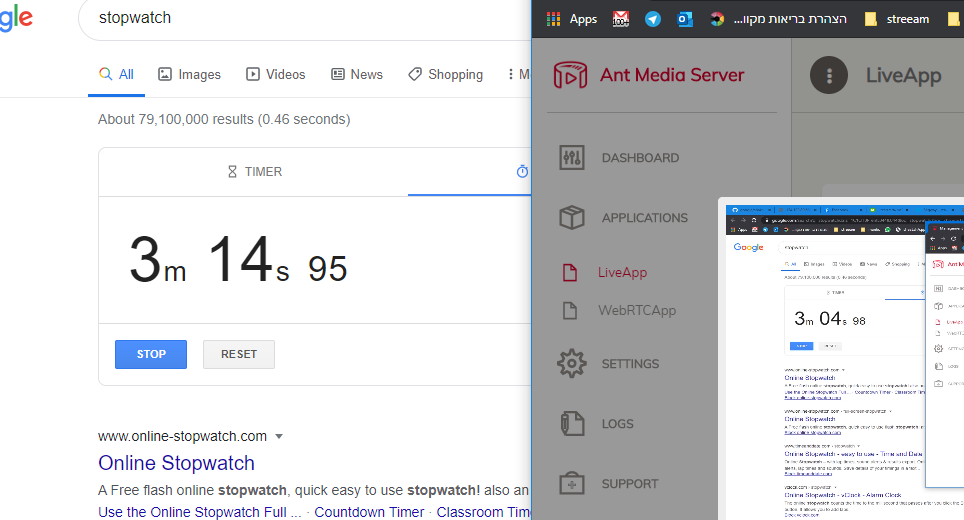

ANTMEDIA

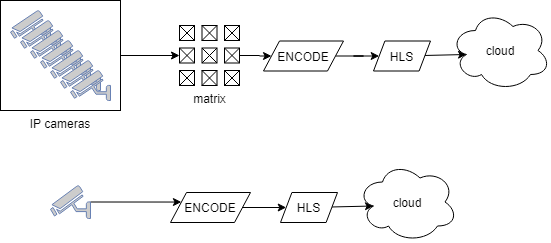

- Pulls RTSP streams from IP cameras; this is configured in the management panel (see wiki).

- Pulls live streams from external sources (see wiki).

Pricing: https://antmedia.io/#selfhosted

Hardware

teradek

axis

china

questions

where are most clients?

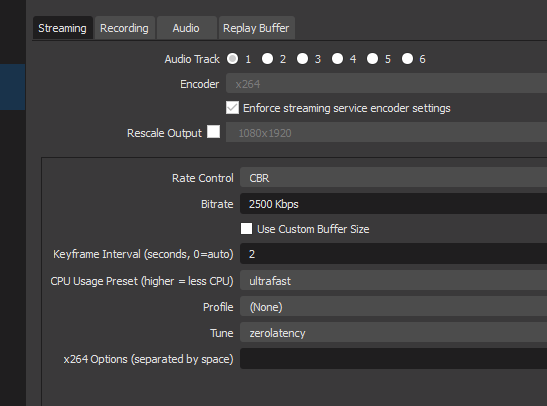

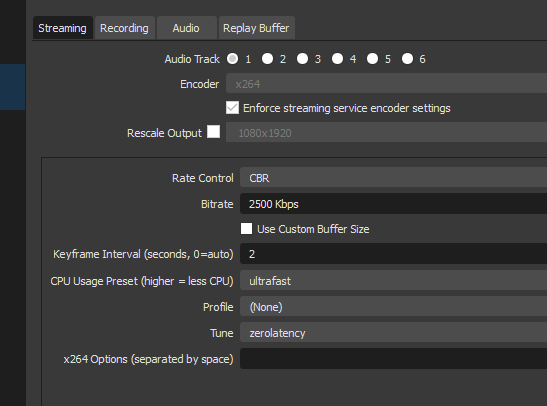

OBS

News

scenario